Did you WannaCRY?

Friday, May 12, 2017, is one of the most important days of my career. It very well could have ended in complete disaster; however, thanks to our fundamental training in cyber security, we escaped with minimal damage. Just before 3am that morning, the infamous ransomware cryptoworm "WannaCRY" was unleashed on the world. By the time I woke up, my phone was already abuzz with a continuous stream of Chicken Littles predicting the end times. I was confused and had a difficult time sifting through all the information to get to the bottom of what was going on. Most of the reports were coming out of Europe, and I read them while going through my morning routine.

There were stories about entire manufacturing plants, financial firms, and banks going down and being unable to transact business. Their computers were entirely unusable. Businesses across many different industries were affected, and I could hardly believe the numbers I was reading. Still very confused, I arrived at work and went straight to my office to continue researching what was going on. At the time, I was the North American IT Director for a manufacturing plant in Dallas, TX. I did not feel particularly threatened that morning, because most of what I had been reading implied that Europe was the only area affected. I was digging in more out of curiosity than anything else.

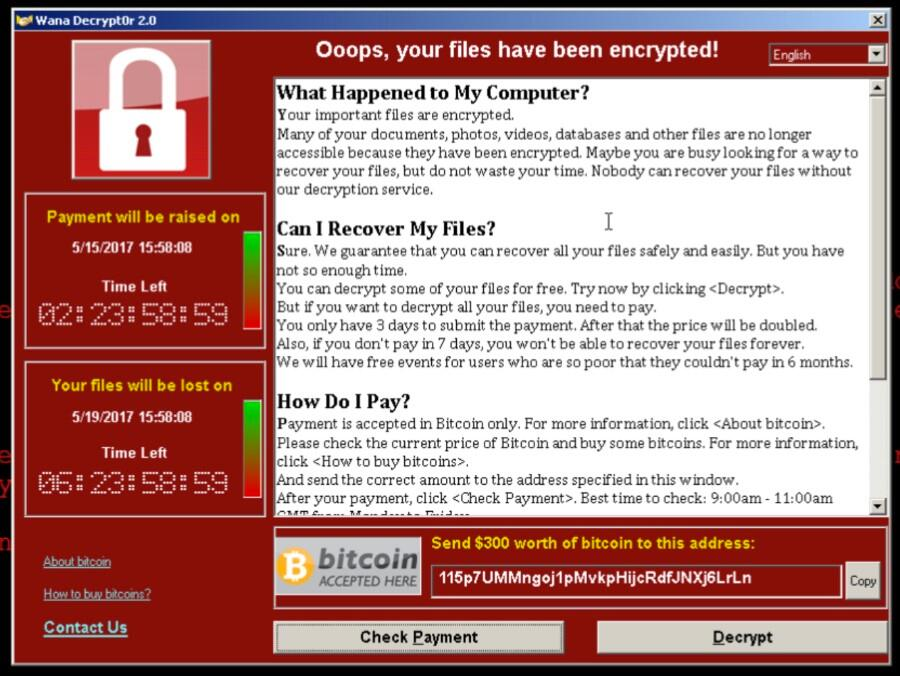

I was finally able to pull out the pertinent information. It appeared that victims would first notice their computers slowing down, way down. Then, after an hour or two, a window would pop up with a ransom note. At that point the user could not close the window or continue to use the computer. Completely locked out.

When a computer is first infected, it devotes most of its computational power to generating encryption keys and encrypting the files on its local hard disk. This is what causes the initial slowdown of the victim machine. While this is happening, however, it is not immediately evident what is going on. Most of the files are still accessible, and the computer, other than being slow, is still usable and responsive.

I was enthralled. I spent the whole morning reading about this new type of ransomware. I had heard of ransomware before, but until that point I had mostly encountered it in theoretical discussions or laboratory experiments. My research was interrupted by the Customer Service Manager, whose office was two doors down from mine. Like most mornings, she came to follow up on her ticket about her computer being slow. Also like most mornings, she complained that it was worse than ever and that she could hardly get any work done. I assured her I would have a technician look into it as soon as one arrived. With that, she left my office and I returned to my research.

It was still before business hours, and our technicians had not yet arrived at the office. Before they could, my research was interrupted again, this time by the VP of Operations. He was complaining about an issue on the manufacturing floor that had been reported the day before. He was expediting this support request and made a very compelling argument that nothing was more important to the business than fixing his issue. At about the same time, our technicians arrived to begin their workday. I dispatched them to the manufacturing floor to help the VP of Operations and decided to hold off on sending a technician to the Customer Service Manager until the issue on the plant floor was resolved. Again, I returned to my research.

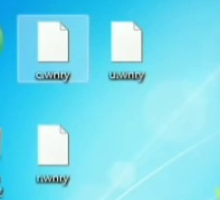

After about an hour, I could hear the Customer Service Manager on the phone in her office. It was usually just background noise and easy to tune out, but then she made a comment that caught my attention: something about strange files showing up on her desktop. That was when I became suspicious. Earlier I mentioned that, other than being slow, an infected computer shows no obvious signs. Well, there is one other indication. As the encryption progresses and just before the ransom note appears, the encrypted files are renamed with a new .WNCRY extension, and unfamiliar files such as @Please_Read_Me@.txt start appearing on the desktop.

Although I was still convinced that the cryptoworm was isolated to Europe, I understood that if I was wrong, the consequences would be too great. I sheepishly got up and walked to her office, remembering how I had completely ghosted her earlier request for a technician. I stood in her doorway waiting to be acknowledged. Once she finished her phone call, she turned to me. I asked her what she meant about strange files on her desktop. "Yeah," she said, "and now there is this weird program I cannot close out of." She turned her computer screen around for me to see, and sure enough, it was WannaCRY.

The ransom-note program was titled "Wana Decryptor 2.0", which also contributed to the name. By the time this interaction occurred, I was just beginning to understand how the malware worked and which vectors the cryptoworm used to infect a network. It would later be revealed that the worm relied on tools leaked from the NSA: the EternalBlue exploit and the DoublePulsar backdoor. EternalBlue had been leaked by the Shadow Brokers hacker group just one month earlier. The Shadow Brokers likely had the exploit for some time before that and chose to release it to the general public only after Microsoft patched the underlying SMB vulnerability that March. Even though a patch was available, many organizations are slow to update their computers.

The goal of ransomware is to use cryptography to encrypt a victim's files and then demand payment in exchange for the decryption key. The idea is that the victim obtains Bitcoin, a pseudonymous and hard-to-trace cryptocurrency, and uses the Wana Decryptor program stuck on the screen to pay the criminals for this key. Meanwhile the ransomware, also being a worm, goes into seek-and-destroy mode, targeting other systems on the network and executing its payload through the exploits described earlier.

I probably turned as white as a ghost when I saw her computer. My immediate thought was that the ransomware had just entered seek-and-destroy mode within the 40 seconds I had been standing in her doorway. My fundamental cyber security training kicked in, and I knew I had to contain and isolate. Her computer was the vector and had to be contained. I walked over and unplugged it, pulled its network cable, and yelled for one of the technicians who I knew was close by. I must have sounded panicked, because he came in wide-eyed and asked what was wrong. I told him, "This computer has a worm. Contain and isolate it. I am going to shut down the network." I did not know for sure whether he was aware of all the news coming out of Europe that morning, but I did not have time to explain more. "Under no circumstances should this computer be connected to the network," I said.

Once I felt confident that the vector was isolated, I knew I had to shut down the entire network for the building. I turned and sprinted to the small server room at the other end of the building. On the way, I considered the repercussions. I knew that if I saved this business from total data annihilation, they would be none the wiser, and I would likely receive heavy punishment for what would be seen as reckless vandalism. On the other hand, if I allowed the ransomware to wreak havoc on the company's data, the consequences would be far worse. Shutting down the network the way I intended would guarantee a three-hour blackout, even if we tried to restart the network immediately, and each hour would cost the plant tens of thousands of dollars.

I considered my most precious asset: the backup server. I figured that if the company lost everything except the backup server, we could rebuild without giving in to the ransom. My confidence in the backup server was shaky, however, because recent test results for this appliance had been inconclusive. By the time I reached the server room, I had made up my mind. I would pull power from the backup server only, then go to the President's office, inform him about what was going on, and demand permission to take the network down.

I pulled the panel off the server cabinet that housed the backup server, reached behind it, and pulled the power plug. The server went dark, and I listened to its fans spin down. I then unplugged its network cables; I did not want a well-intentioned technician to come in behind me and "fix" our backup server. I could not be sure whether the cryptoworm had already delivered its payload to that server. I then walked briskly to the President's office.

In a rare moment, he was sitting at his desk, chin resting on his hand, looking at his computer. Usually he was in a meeting, on the phone, or not in his office at all. He saw me and immediately welcomed me in; he had always held an open-door policy and warmly welcomed anyone into his office when nothing else was going on. I then had to have the same conversation that other pitiable IT managers across the world were having, panicking and screaming that the sky was falling. Realizing this, and trying to keep my composure, I started to explain what was going on.

A line often attributed to Albert Einstein says, "If you cannot explain it simply, you do not understand it well enough." I have always believed that wholeheartedly. The problem was that the issue we faced was so new that I did not know it well enough to explain it simply to him. I did understand it well enough to know the risk and the mitigations. This is the fundamental cyber security training I mentioned: Contain & Isolate. However, I knew he was not going to let me shut down the plant without asking some hard questions, and I was afraid I did not yet know how to answer them. Besides, precious time was slipping away. I asked him to walk with me to the server room while we discussed it.

As we walked, he continued to ask questions, and I answered them to the best of my ability, though I felt it was not enough. Once in the server room, I pointed at a switch and told him what it did. I explained my intentions and the consequences of a network blackout. Then, without giving him the opportunity to object, I cut power to the building's entire network stack. I told him I was sorry but that I would have more information for him in less than an hour, and I asked him to run interference with the VPs, which he agreed to do. He did not seem to agree with my decision, but he also did not seem angry or frustrated.

It was about 9:30am. Just then, two technicians arrived at the server room, apparently to follow up on alerts that the backup server had gone down. I informed them that the network was shut down pending a cyber security threat investigation and asked them to gather all IT personnel in the conference room. We contacted our Managed Service Provider, who dispatched two teams, one to our plant and another to our data center, to take a full inventory and to further contain, isolate, and evaluate each server.

Every server would be thoroughly inspected to determine whether the worm's payload had been delivered to it. The one exception was the backup server, which would remain locked out until all other servers had been evaluated. Since Microsoft had released a patch for this exploit two months earlier, we patched each server as it was verified clean. The servers were patched offline and reintroduced to an isolated network segment, set up specifically for this recovery effort, which would hold nothing except systems confirmed to be clean and patched. This network had no internet access.

We did not escape unscathed. Three servers had been infected with the cryptoworm: two mission-critical file servers and a third server pending decommission. The server pending decommission was immediately scrapped. The backup server was then powered on in an isolated environment, inspected, and verified. We experienced the best-case scenario with our backup server: it already had Microsoft's patch installed, which had protected it from the cryptoworm's payload during the incident. Logs showed that during the testing we had been performing on the appliance in the preceding weeks, Windows updates had been installed manually ahead of schedule. It was such a relief. We assembled a team to begin restoring the affected file servers from backup.

By 4pm that afternoon, recovery efforts were well underway. 75% of our backend systems had been verified, patched, and brought online in the isolated environment, and our desktop support team had verified and patched over 80% of the client workstations in the plant. I finally had a moment to breathe and returned to my desk to check the latest news on this worm. The news reports were grim. Institutions everywhere were going through similar experiences. FedEx was devastatingly affected, as were banks, universities, and hospitals. However, one brilliant story was emerging. About an hour earlier, at approximately 3pm, a 22-year-old British security researcher, Marcus Hutchins, had accidentally discovered a global kill switch for the cryptoworm. The kill switch could not do much for systems that were already infected and encrypted, but it prevented the worm from spreading further.

With news that the ransomware was no longer spreading, the network isolation was lifted, and a team was formed to bring all of our systems back online. Three hours later, after 8 hours of total downtime, the plant was operational again. The outage still cost the company hundreds of thousands of dollars, but it was the best possible outcome once the worm had entered the network. The initial vector in our case was a phishing email that the Customer Service Manager had received at 2:30am that morning.

In the following days, there were some lingering effects from the outage: IP assignments out of whack, switches needing a reboot here and there, missing files, and all the usual detritus to be expected from such a network disruption. All of this had happened on a Friday, so my team and I worked through the weekend to make sure everything was ready to go on Monday. In a meeting first thing that Monday, the President, the VPs, and I had a difficult discussion about what had occurred.

The VPs were grumbling and upset about the lost productivity, not fully understanding the crisis we had avoided. This was understandable, and I did my best to explain the circumstances we had faced. It didn't necessarily help, because their argument was that we had made a huge investment in cyber security, monitoring, and antivirus systems across the organization. I explained the nature of the cryptoworm and how new this type of malware was. It helped that they had all heard news of several companies facing multi-day outages from the same attack. I continued to smile as I defended and praised my team, knowing it was a job well done.

This incident was a defining moment in my career. In the aftermath, I began honing my skills in Site Reliability practices and Operational Incident Management. As a company, we began adopting processes and procedures to help us handle emergencies like this in the future. Out of those policies, I began crafting a post-mortem process for incidents, which I am happy to share with you.